Building an AI Agent Framework - Why I'm Starting Over

From sequential loops to event-driven architecture - learning why simple isn't always enough when building production AI agents

How it all started

Four months ago, I built HICA because I was frustrated.

LangGraph felt like overkill. CrewAI was doing too much. Every framework I tried had these grand abstractions for "agent graphs" and "workflows" that made simple things complicated and complicated things impossible. None of the abstractions felt right. Everything was hidden by the library.

I wanted something minimal. Something understandable. Something you could actually use to build real AI applications without fighting the framework.

So I built HICA. And for a while, it felt like the right choice. I was proud of building something simple that could still handle user clarification, MCP, and async workflows in an agent loop that can be paused and resumed.

Then I tried to build a coding agent with it.

And everything fell apart.

The Dream: A Simple Agent Framework

Here's what I wanted HICA to be:

The Core Loop: Thought → Action → Observation

The central idea was beautiful in its simplicity:

1. User sends message → stored as Event in Thread

2. Agent thinks → "What tool should I use?" (LLM call)

3. Agent generates parameters → "How should I call it?" (LLM call)

4. Agent executes tool → gets result

5. Agent observes → "What's next?" (repeat from step 2)

6. Agent finishes → "done" or "clarification"

Every step logged as an Event in a Thread (the conversation history). Clean, traceable, resumable.
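The six steps above can be sketched as a plain loop. This is a minimal illustration, not HICA's actual code: `fake_llm_select`, `fake_llm_params`, and the `TOOLS` table are hypothetical stand-ins for the two LLM calls and the tool registry.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class Event:
    type: str
    data: Any


@dataclass
class Thread:
    events: list[Event] = field(default_factory=list)


# Hypothetical tool table standing in for a real registry.
TOOLS = {"add": lambda a, b: a + b}


def fake_llm_select(thread: Thread) -> str:
    """Stand-in for LLM call 1: pick a tool, or 'done' once a result exists."""
    has_result = any(e.type == "tool_response" for e in thread.events)
    return "done" if has_result else "add"


def fake_llm_params(thread: Thread, tool: str) -> dict:
    """Stand-in for LLM call 2: produce arguments for the chosen tool."""
    return {"a": 2, "b": 2}


def run_loop(thread: Thread, max_steps: int = 10) -> Thread:
    for _ in range(max_steps):
        intent = fake_llm_select(thread)                 # step 2: think
        thread.events.append(Event("llm_response", {"intent": intent}))
        if intent in ("done", "clarification"):          # step 6: finish
            break
        args = fake_llm_params(thread, intent)           # step 3: parameters
        result = TOOLS[intent](**args)                   # step 4: execute
        # step 5: record the observation, then loop back to step 2
        thread.events.append(Event("tool_response", {"result": result}))
    return thread


thread = run_loop(Thread([Event("user_input", "Calculate 2+2")]))
print([e.type for e in thread.events])
```

Because every step only appends to the thread, the event list doubles as the trace of what the agent did.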

What Made HICA Different

Event-Sourced Everything

    thread = Thread(events=[
        Event(type="user_input", data="Calculate 2+2"),
        Event(type="llm_response", data={"intent": "add", "arguments": {}}),
        Event(type="tool_response", data={"result": 4}),
        Event(type="llm_response", data={"intent": "done"}),
    ])

Every interaction became a permanent record. Save the thread, resume it later. Full audit trail. You can either pass the entire thread in the context window or choose what to send.
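Here is a rough sketch of what "save the thread, resume it later" and "choose what to send" could look like. It assumes event data is JSON-serializable; `dump_thread`, `load_thread`, and `select_context` are hypothetical helper names, not HICA's API.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import Any


@dataclass
class Event:
    type: str
    data: Any


@dataclass
class Thread:
    events: list[Event] = field(default_factory=list)


def dump_thread(thread: Thread) -> str:
    """Serialize the full event log; assumes event data is JSON-serializable."""
    return json.dumps([asdict(e) for e in thread.events])


def load_thread(raw: str) -> Thread:
    """Rebuild a Thread from its serialized event log."""
    return Thread([Event(**item) for item in json.loads(raw)])


def select_context(thread: Thread, keep_types: set[str], last_n: int) -> list[Event]:
    """Choose which events go into the prompt: filter by type, keep the newest."""
    wanted = [e for e in thread.events if e.type in keep_types]
    return wanted[-last_n:]


thread = Thread([
    Event("user_input", "Calculate 2+2"),
    Event("llm_response", {"intent": "add"}),
    Event("tool_response", {"result": 4}),
])
restored = load_thread(dump_thread(thread))
context = select_context(restored, {"user_input", "tool_response"}, last_n=2)
```

The stored log stays complete for auditing, while the context sent to the model can be a filtered view of it.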

Separation of Routing and Execution

The agent loop was split into clean phases:

- select_tool() - LLM decides what to do ("use add tool" or "done" or "ask for clarification")

- fill_parameters() - LLM decides how to do it ({"a": 2, "b": 2})

- execute_tool() - Actually run the tool

The two LLM phases were separate calls, each with its own prompt. Explicit. Debuggable. No magic.

Human-in-the-Loop Built In

    async for _ in agent.agent_loop(thread):
        pass

    # Check if agent needs help
    if thread.awaiting_human_response():
        # Agent said "clarification" - it's paused
        # User can provide input
        thread.append_event(Event(type="user_input", data="Here's the info"))

        # Resume from where it stopped
        async for _ in agent.agent_loop(thread):
            pass

The agent could stop mid-execution and ask for help. No hallucinating missing data. Just pause, ask, resume.

Tool Flexibility: Local + MCP Unified

This was one of HICA's best features. Most frameworks make you choose: either local Python tools OR remote services. HICA unified them:

    # Define local tools with a decorator
    @registry.tool()
    def add(a: int, b: int) -> int:
        "Add two numbers"
        return a + b

    # Configure MCP server (runs SQLite tools as subprocess)
    mcp_config = {
        "mcpServers": {
            "sqlite": {
                "command": "uvx",
                "args": ["mcp-server-sqlite", "--db-path", "/data/db.sqlite"]
            }
        }
    }

    # Connect and load MCP tools
    mcp_manager = MCPConnectionManager(mcp_config)
    await mcp_manager.connect()
    await registry.load_mcp_tools(mcp_manager)

    # Now registry has: add (local) AND create_table, query_db (MCP)
    # Agent doesn't care which is which
    agent = Agent(config=config, tool_registry=registry)

Why this mattered: MCP tools run as separate processes (safer, isolated), but the agent treats them identically to local functions. Same registry, same execution flow.

MCP (Model Context Protocol) lets you run tools as external processes:

- SQLite queries in isolated subprocess

- File operations in sandboxed environment

- Tools written in any language (call Rust/Go from Python)

- Clean separation of concerns

HICA made MCP a first-class citizen - the tool registry abstracted away whether tools were local functions or MCP subprocesses.
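To make "the agent doesn't care which is which" concrete, here is a minimal sketch of a registry that hides where a tool lives. It is an assumption about how such an abstraction can work, not HICA's implementation: `register_remote` and `fake_query_db` are hypothetical, and the fake remote tool does not speak MCP at all.

```python
import asyncio
import inspect
from typing import Any, Callable


class ToolRegistry:
    """Hypothetical registry: callers use execute() and never see where a tool lives."""

    def __init__(self) -> None:
        self._tools: dict[str, Callable[..., Any]] = {}

    def tool(self) -> Callable:
        """Decorator that registers a local Python function under its own name."""
        def decorator(fn: Callable[..., Any]) -> Callable[..., Any]:
            self._tools[fn.__name__] = fn
            return fn
        return decorator

    def register_remote(self, name: str, call: Callable[..., Any]) -> None:
        """Register an async callable that forwards to an external process."""
        self._tools[name] = call

    async def execute(self, name: str, **kwargs: Any) -> Any:
        result = self._tools[name](**kwargs)
        if inspect.isawaitable(result):  # async (remote-style) tools return awaitables
            result = await result
        return result


registry = ToolRegistry()


@registry.tool()
def add(a: int, b: int) -> int:
    return a + b


async def fake_query_db(sql: str) -> list[tuple]:
    """Stand-in for a call over MCP; a real one would talk to the subprocess."""
    await asyncio.sleep(0)
    return [(1, "row for: " + sql)]


registry.register_remote("query_db", fake_query_db)


async def main() -> tuple[Any, Any]:
    return (
        await registry.execute("add", a=2, b=2),
        await registry.execute("query_db", sql="SELECT 1"),
    )


local_result, remote_result = asyncio.run(main())
```

The agent loop only ever calls `execute(name, **kwargs)`, so swapping a local function for a subprocess-backed one does not touch the loop.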

That's it. Give it a thread with user input, let it run until done. The loop handles everything: thinking, tool calls, results, deciding what's next.

And you know what? HICA does all of this. The README looks great. The examples work.

But then I tried to build something real with it.

The Reality: When Simple Isn't Enough

I wanted to build a coding agent, one that:

- Streams code as it writes it (like Cursor or Copilot)

- Lets you see what it's thinking in real-time

- Asks for approval before running commands

- Lets you hit "Stop" if it goes off the rails

Turns out, HICA can't do any of that.

Problem 1: The Frozen Screen

Here's what using HICA feels like:

You: "Refactor this 500-line file"

[15 seconds of nothing]

[Entire response appears at once]

You: "...was this thing even working?"

HICA doesn't stream. It waits for the entire LLM response, then shows it all at once.

In demos, this is fine. In production, it looks broken.
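The difference is easy to see in a toy example. This sketch uses a hypothetical `fake_llm_stream` generator in place of a real streaming client; the point is only the contrast between collecting everything first and surfacing tokens as they arrive.

```python
import asyncio
from typing import AsyncIterator


async def fake_llm_stream(prompt: str) -> AsyncIterator[str]:
    """Hypothetical stand-in for a streaming LLM client: yields tokens one by one."""
    for token in ["def ", "add", "(a, b): ", "return a + b"]:
        await asyncio.sleep(0.01)  # simulated network latency per token
        yield token


async def blocking_call(prompt: str) -> str:
    """What a non-streaming loop does: nothing is visible until the very end."""
    return "".join([tok async for tok in fake_llm_stream(prompt)])


async def streaming_call(prompt: str, shown: list[str]) -> str:
    """Streaming: each token is surfaced as soon as it arrives."""
    async for tok in fake_llm_stream(prompt):
        shown.append(tok)  # a real UI would render the token here
    return "".join(shown)


shown: list[str] = []
full = asyncio.run(blocking_call("refactor"))
streamed = asyncio.run(streaming_call("refactor", shown))
```

Both calls end with the same text. Only the second gives the user something to look at while it is being produced.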

Problem 2: The Unstoppable Agent

Here's what happened when I tried to add a "Stop" button:

LLM: "Let me refactor your entire codebase..."

You: [clicks STOP]

HICA: [continues for 2 more minutes]

You: [force quits application]

There's no way to interrupt HICA mid-operation. Once it starts, it runs until it's done.

This isn't just annoying. It's unusable for coding agents where mistakes are expensive.
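For contrast, this is roughly what a working "Stop" looks like in plain asyncio when the agent run is a task that can be cancelled. It is a sketch under that assumption; `long_running_agent` is a hypothetical placeholder, and HICA's loop was not structured this way.

```python
import asyncio


async def long_running_agent(progress: list[str]) -> None:
    """Hypothetical agent run: ten slow steps, with cleanup if cancelled."""
    try:
        for step in range(10):
            await asyncio.sleep(0.05)  # each await is a point where cancel can land
            progress.append(f"step {step}")
    except asyncio.CancelledError:
        progress.append("cancelled")  # release resources, record the interrupt
        raise  # re-raise so the task is actually marked cancelled


async def main() -> list[str]:
    progress: list[str] = []
    task = asyncio.create_task(long_running_agent(progress))
    await asyncio.sleep(0.12)  # the user clicks Stop shortly after the run starts
    task.cancel()
    try:
        await task
    except asyncio.CancelledError:
        pass
    return progress


progress = asyncio.run(main())
```

Cancellation only lands at an `await`, so a loop that blocks inside one long call gives the Stop button nothing to hook into.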

Problem 3: The Message That Got Lost

This one hurt:

Agent: [Starts analyzing codebase - takes 30 seconds]

You: [Types "Actually, skip the analysis"]

HICA: [Your message vanishes into the void]

Agent: [Finishes analysis like nothing happened]

HICA processes one thing at a time. If you send a message while it's busy, that message is just... lost.

Imagine telling that to a user: "Sorry, the agent was busy. Try again."
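One common way to avoid dropping input is to buffer it in an inbox that the agent checks between steps. The sketch below assumes the agent's work can be split into steps; the `agent` function and the `"skip"` message are hypothetical.

```python
import asyncio


async def agent(inbox: asyncio.Queue, log: list[str]) -> None:
    """Hypothetical agent: does slow work, but checks its inbox between steps."""
    for step in range(3):
        await asyncio.sleep(0.05)  # one slow unit of work
        log.append(f"analysis step {step}")
        while not inbox.empty():  # drain anything the user sent meanwhile
            message = inbox.get_nowait()
            log.append(f"user said: {message}")
            if message == "skip":
                log.append("analysis skipped")
                return


async def main() -> list[str]:
    inbox: asyncio.Queue = asyncio.Queue()
    log: list[str] = []
    task = asyncio.create_task(agent(inbox, log))
    await asyncio.sleep(0.02)  # the user types while the agent is busy
    await inbox.put("skip")    # buffered, not dropped
    await task
    return log


log = asyncio.run(main())
```

The message arrives mid-step, waits in the queue, and is handled as soon as the current step finishes.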

The Moment I Realized I Was Wrong

I kept making changes to HICA's core. Adding hacks. Special cases. The codebase was starting to look like the frameworks I hated.

I watched Vaibhav's videos from Boundary. He was talking about event-driven architectures. About how real systems handle concurrency. About patterns used by companies shipping real AI products.

And it clicked.

I wasn't building a framework that was too simple. I was building a framework with the wrong architecture.

HICA's sequential loop model is fine for:

- Demos

- Batch processing

- One-off scripts

- CLIs where users wait for completion

But it fundamentally can't handle:

- Real-time streaming

- User interrupts

- Concurrent operations

- Multiple users

And those aren't optional for a production coding agent. They're the baseline.

The Journey to Fixing HICA: What I Tried

I tried to fix HICA's problems with simpler solutions first. Here's what I learned from the failures.

Attempt 1: Global Callback Functions

The Idea: Just add callbacks for streaming, right?

    async def agent_loop(thread, on_chunk=None):
        while True:
            response = await select_tool(thread)

            # Stream chunks via callback
            async for chunk in llm.stream(...):
                if on_chunk:
                    on_chunk(chunk)  # Send to UI

Why It Failed:

- How do I send callbacks from WebSocket handler to agent loop?

- What if I want multiple subscribers (logs, metrics, UI)?

- Callbacks create tight coupling - Agent now knows about UI

- Can't add new subscribers without modifying agent_loop()

The Lesson: Callbacks are just hidden dependencies.

Attempt 2: Inject WebSocket into Agent

The Idea: Pass the WebSocket connection to the agent directly.

    agent = Agent(config, tool_registry, websocket=ws)

    async def agent_loop(self, thread):
        async for chunk in llm.stream(...):
            await self.websocket.send(chunk)  # Direct send

Why It Failed:

- How do I test this? Mock the entire WebSocket?

- What about multiple clients? Pass a list of WebSockets?

- Agent is now responsible for network communication - violates single responsibility

- Can't run agent without WebSocket (breaks batch mode, CLI)

The Lesson: Direct dependencies make testing impossible.

Attempt 3: Shared State Object

The Idea: Use a shared mutable object for real-time state.

    class SharedState:
        current_message: str = ""
        is_streaming: bool = False

    state = SharedState()

    # Agent writes to it
    async def agent_loop(thread, state):
        state.is_streaming = True
        async for chunk in llm.stream(...):
            state.current_message += chunk

    # WebSocket reads from it
    async def websocket_handler(websocket, state):
        while True:
            await websocket.send(state.current_message)
            await asyncio.sleep(0.1)

Why It Failed:

- Race conditions: Agent writes, WebSocket reads - no locks!

- Who resets current_message between responses?

- How do I handle interrupts? Set state.should_stop = True and hope agent checks it?

- Mutable shared state is a debugging nightmare

The Lesson: Shared mutable state scales to chaos.

Attempt 4: Message Queue Between Components

The Idea: Agent writes to a queue, WebSocket reads from it.

    queue = asyncio.Queue()

    # Agent produces
    async def agent_loop(thread, queue):
        async for chunk in llm.stream(...):
            await queue.put({"type": "chunk", "text": chunk})

    # WebSocket consumes
    async def websocket_handler(websocket, queue):
        while True:
            msg = await queue.get()
            await websocket.send_json(msg)

Why It Almost Worked:

This was the closest! But problems emerged:

- Only one consumer per queue (what about logs, metrics?)

- How do I send user interrupts back to agent? Need a second queue?

- Now I have bidirectional queues - getting complex

- What if agent needs to react to user input mid-stream?

The Realization: I need broadcast (one → many), not queue (one → one).
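The one-to-one limitation is easy to reproduce. In this small sketch, two hypothetical consumers (`ui` and `logger`) read from the same `asyncio.Queue`; each chunk is handed to exactly one of them, never to both.

```python
import asyncio


async def consumer(name: str, queue: asyncio.Queue, seen: dict[str, list[str]]) -> None:
    """Take items off the shared queue forever and record who received what."""
    while True:
        item = await queue.get()
        seen[name].append(item)
        queue.task_done()


async def main() -> dict[str, list[str]]:
    queue: asyncio.Queue = asyncio.Queue()
    seen: dict[str, list[str]] = {"ui": [], "logger": []}
    workers = [asyncio.create_task(consumer(n, queue, seen)) for n in seen]
    for chunk in ["a", "b", "c", "d"]:
        await queue.put(chunk)
    await queue.join()  # wait until every chunk has been taken by someone
    for w in workers:
        w.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    return seen


seen = asyncio.run(main())
print(seen)
```

How the chunks are divided depends on scheduling, and one consumer may take them all. What never happens is both consumers seeing the same chunk, which is exactly what a UI plus a logger would need.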

The Moment It Clicked

I was watching Vaibhav's videos from Boundary about event-driven systems. He mentioned:

"In production systems, you don't want components calling each other. You want them publishing events. Let subscribers decide what they care about."

And it clicked.

What I needed wasn't:

- Agent → WebSocket (tight coupling)

- Agent → Queue → WebSocket (limited to 1-to-1)

What I needed was:

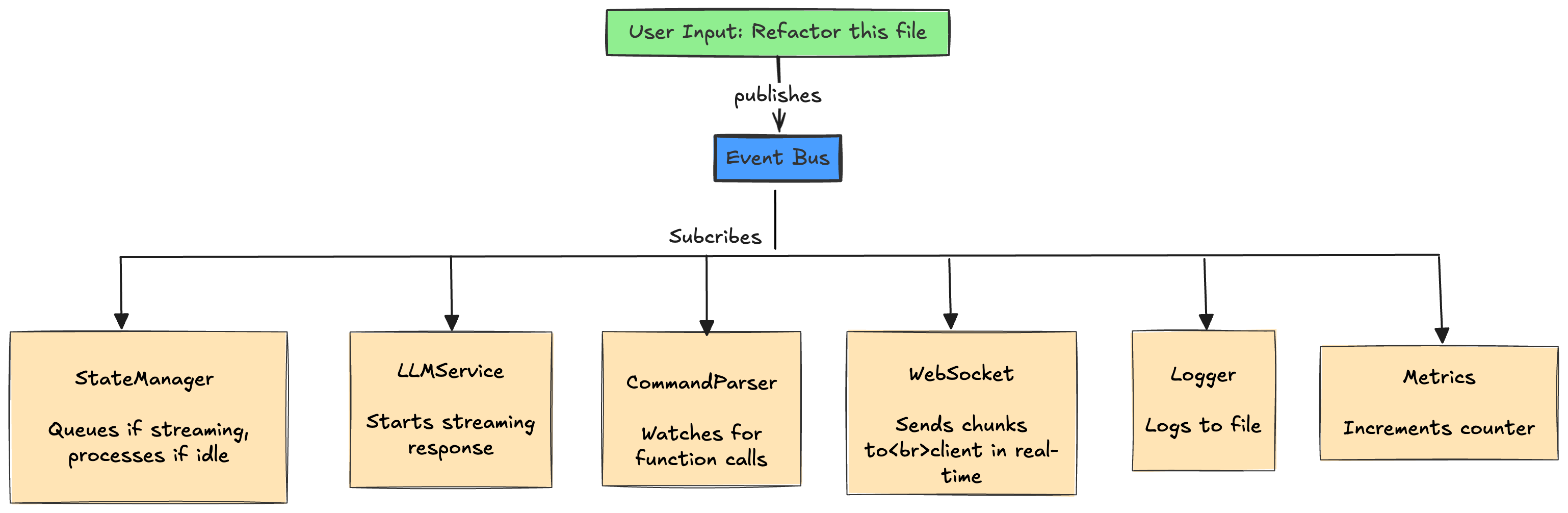

- Agent → EventBus ← WebSocket

- Agent → EventBus ← Logger

- Agent → EventBus ← Metrics

- Agent → EventBus ← CommandParser

An EventBus where:

- Anyone can publish events (user input, LLM chunks, interrupts)

- Anyone can subscribe to events they care about

- No component knows about any other component

- Adding new subscribers doesn't require code changes
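A minimal in-process version of that idea fits in a few lines. This is a sketch to show the shape, not the EventBus I'm building: the class, the `"llm_chunk"` topic name, and the two handlers are all illustrative assumptions.

```python
import asyncio
from collections import defaultdict
from typing import Any, Awaitable, Callable

Handler = Callable[[Any], Awaitable[None]]


class EventBus:
    """Minimal in-process pub/sub: publishers and subscribers only share topic names."""

    def __init__(self) -> None:
        self._handlers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._handlers[topic].append(handler)

    async def publish(self, topic: str, payload: Any) -> None:
        # Broadcast: every subscriber of the topic gets the payload, concurrently.
        await asyncio.gather(*(h(payload) for h in self._handlers[topic]))


async def main() -> tuple[list[str], list[str]]:
    bus = EventBus()
    ui_output: list[str] = []
    log_lines: list[str] = []

    async def ui(chunk: str) -> None:
        ui_output.append(chunk)

    async def logger(chunk: str) -> None:
        log_lines.append(f"chunk={chunk!r}")

    bus.subscribe("llm_chunk", ui)
    bus.subscribe("llm_chunk", logger)  # added without touching the publisher

    for chunk in ["Hel", "lo"]:
        await bus.publish("llm_chunk", chunk)
    return ui_output, log_lines


ui_output, log_lines = asyncio.run(main())
```

Unlike the queue, every subscriber sees every chunk, and the code that publishes has no idea who is listening.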

--

What EventBus Actually Solves

Key Points:

- All components react concurrently, not sequentially

- No component knows about any other component

- Event Bus decouples producers from consumers

This is event-driven architecture. Services communicate through events. Everything can happen concurrently. Streaming is native. Interrupts are handled gracefully.

And most importantly: I can add new features by adding subscribers, not modifying existing code.

What I'm Building Instead

I'm building a new event-driven agent system from scratch. Not because it's fun (starting over is painful), but because it's the only way to build something that actually works in production.

I have figured out a few of the components and will cover them in the coming posts.

Here are the key ones:

- EventBus: Central nervous system for loose coupling

- Reducers: Predictable state management

- Streaming Services: Real-time incremental updates

- Interrupt Coordination: Graceful cancellation

- Message Queuing: Don't lose user input

- Command Lifecycle: Track requests → approvals → execution
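Of the components above, a reducer is the easiest to show in isolation: a pure function that takes the current state and an event and returns the next state. The state fields and event names below are hypothetical placeholders, not the final design.

```python
from dataclasses import dataclass, replace
from functools import reduce


@dataclass(frozen=True)
class AgentState:
    text: str = ""
    streaming: bool = False
    interrupted: bool = False


def reducer(state: AgentState, event: dict) -> AgentState:
    """Pure function: same state + same event always gives the same new state."""
    kind = event["type"]
    if kind == "stream_started":
        return replace(state, text="", streaming=True)
    if kind == "llm_chunk" and not state.interrupted:
        return replace(state, text=state.text + event["text"])
    if kind == "interrupt":
        return replace(state, streaming=False, interrupted=True)
    return state  # unknown or ignored events leave state unchanged


events = [
    {"type": "stream_started"},
    {"type": "llm_chunk", "text": "def "},
    {"type": "interrupt"},
    {"type": "llm_chunk", "text": "ignored"},
]
final_state = reduce(reducer, events, AgentState())
```

Because state is only ever derived from the event sequence, replaying the same events reproduces the same state, which answers the "who resets current_message?" question from the shared-state attempt.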

I could've built this quietly and launched a polished v2.0.

But when I built HICA, I didn't document why I made certain choices. I didn't share what I learned along the way. And when it turned out to be wrong, there was no record of why it was wrong.

So this time, I'm building in public.

Every phase will be:

- Committed to git with detailed messages

- Explained in blog posts

- Documented with architectural decisions

- Tested and runnable at every step

If you're building AI agents, you'll hit these same problems. Maybe you can learn from my mistakes before making them yourself.

I'm doing this in public because I want feedback. I want to know when I'm going down the wrong path. I want to hear from engineers who've solved these problems before.

If you've built AI agents in production, I want to hear about your architecture choices.

If you've struggled with the same problems, let's figure out the solutions together.

If you think I'm overcomplicating things, tell me why.

I'm building this live and would love to hear from you:

- What problems have you hit with existing frameworks?

- What do you wish AI agent libraries had?

- What's the most painful part of building AI apps?

Drop a comment or subscribe to follow along.

You can follow along on:

- GitHub: https://github.com/sandipan1

- Substack: [Subscribe for updates]

- Twitter: @sandipuns