(Part 4) Human-in-the-Loop AI with MCP Sampling

Learn how human-in-the-loop systems with MCP Sampling add critical safety, oversight, and control to AI workflows in enterprise environments.

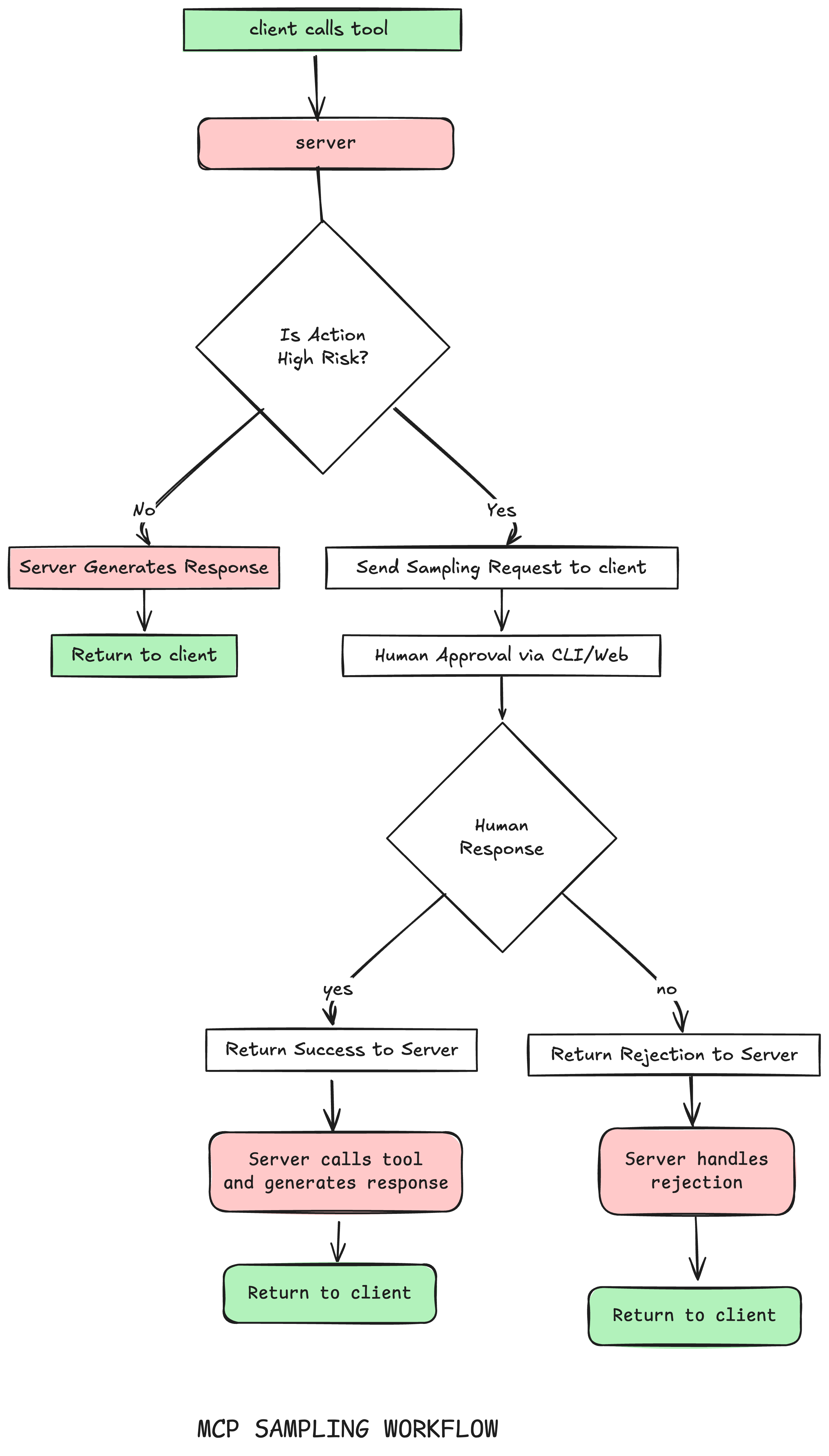

As I've been experimenting with the Model Context Protocol (MCP), I discovered an interesting way to implement human-in-the-loop workflows using LLM sampling. MCP sampling is intended to allow MCP servers to ask the client's LLM to generate text or process data on their behalf.

The server requests LLM sampling, but the client maintains full control over the process. This lets us bypass direct LLM messaging entirely and route the request into a human approval workflow instead.

This addresses critical safety and control issues while maintaining the efficiency benefits of automation. Rather than choosing between full AI autonomy or manual processes, you can implement intelligent checkpoints where human judgment guides AI decision-making.

Why Human Oversight Matters in AI Systems

Most AI deployments face a fundamental tension: they need enough autonomy to be useful, but too much autonomy creates risk. This tension manifests in several critical ways:

Security Vulnerabilities: Tool poisoning attacks can embed malicious instructions in MCP tool descriptions that are invisible to users but visible to AI models. These hidden instructions manipulate AI models into performing unauthorized actions without user awareness.

Lack of Control: AI systems can make decisions that affect sensitive operations like accessing SSH keys, configuration files, or databases without proper oversight.

Trust Issues: Without human oversight, businesses struggle to deploy AI for high-stakes operations where accuracy and approval are critical.

Risk-Based Approach to MCP Tool Operations

MCP tools can be categorized based on their potential business impact, determining when human approval becomes essential:

Low Stakes Operations

- Read access to public data (Wikipedia searches, public APIs)

- Communication with the agent author (progress updates via Slack)

Medium Stakes Operations

- Read access to private data (emails, calendars, CRM queries)

- Communication with strict rules (pre-approved email templates)

High Stakes Operations

- Communication on behalf of individuals or companies

- Write access to private data (CRM updates, billing changes)

- Financial transactions and payments

High-stakes operations are where this approach provides the most value. These are the functions that promise significant workflow automation but require 100% accuracy and human judgment.
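To make the tiers above concrete, here is a minimal sketch of how a client or server might decide which tool calls need a human checkpoint. The tool names and the `requires_approval` helper are hypothetical and only mirror the categories listed; they are not part of MCP or FastMCP.

```python
from enum import Enum


class Stakes(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Hypothetical tool names, chosen only to mirror the categories above.
TOOL_STAKES = {
    "search_wikipedia": Stakes.LOW,
    "post_progress_update": Stakes.LOW,
    "read_calendar": Stakes.MEDIUM,
    "send_templated_email": Stakes.MEDIUM,
    "update_crm_record": Stakes.HIGH,
    "make_payment": Stakes.HIGH,
}


def requires_approval(tool_name: str) -> bool:
    """Return True when a human should confirm the call.

    Unknown tools are treated as high stakes, so a newly added tool
    fails closed instead of silently skipping approval.
    """
    stakes = TOOL_STAKES.get(tool_name, Stakes.HIGH)
    return stakes is Stakes.HIGH
```

Treating unknown tools as high stakes is a design choice in this sketch; a stricter setup could also gate medium-stakes tools.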

MCP Sampling for Human Approval

FastMCP is one of the few libraries that provide a sampling mechanism.

While FastMCP's sampling feature was originally designed to request LLM completions from clients, it can be cleverly repurposed to implement human approval workflows. This creative use of the sampling mechanism transforms what was meant for AI text generation into a powerful human-in-the-loop system.

Core Sampling Architecture

The Context.sample() method was designed to send prompts to client-side LLMs for text generation. However, by implementing custom sampling handlers on the client side, you can intercept these "sampling" requests and route them to human operators instead of AI models.

Server-side Approval Request

Here's how the pattern works in practice. On the server side, a tool can request "approval" by using the sampling mechanism:

```python
from fastmcp import Context, FastMCP

mcp = FastMCP("LLMSamplingDemo")


@mcp.tool()
async def make_payment(amount: float, ctx: Context) -> str:
    await ctx.info(f"message from client: {amount}", logger_name="LLMSamplingDemo")
    # The client decides who answers this "sampling" request: an LLM or a human.
    result = await ctx.sample(f"Confirm payment of: {amount}")
    if result.text == "yes":
        await ctx.info(f"payment of {amount} confirmed", logger_name="LLMSamplingDemo")
        return "payment successful"
    else:
        await ctx.info(f"payment of {amount} rejected", logger_name="LLMSamplingDemo")
        return "payment rejected"


if __name__ == "__main__":
    mcp.run()
```

The server tool calls ctx.sample() with a confirmation prompt, expecting a human response rather than an LLM completion.
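Because the reply now comes from a person typing free text, an exact match on "yes" is fragile ("Yes", "y", or a trailing space would all count as a rejection). A minimal sketch of a more forgiving check follows; the `is_approved` helper and the list of accepted words are my own assumptions, not part of FastMCP.

```python
from typing import Optional

# Hypothetical set of replies that count as approval; adjust to taste.
APPROVE_WORDS = {"yes", "y", "approve", "approved"}


def is_approved(reply: Optional[str]) -> bool:
    """Interpret a free-text reply; anything unrecognized counts as a rejection."""
    if reply is None:
        return False
    return reply.strip().lower() in APPROVE_WORDS
```

Inside the tool, the comparison would then read `if is_approved(result.text):`, so that only an explicit approval lets the payment through.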

Client-Side Approval Mechanisms

On the client side, instead of connecting to an LLM, you implement a custom SamplingHandler that presents the request to a human operator.

The sampling callback system transforms handler responses into proper MCP message results, ensuring compatibility while maintaining flexibility for approval workflows.

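```python
import asyncio

from fastmcp import Client
from fastmcp.client.logging import LogMessage
from fastmcp.client.sampling import RequestContext, SamplingMessage, SamplingParams


async def log_handler(message: LogMessage) -> None:
    # Not shown in the original snippet; a minimal stand-in that prints
    # the ctx.info() messages sent by the server.
    print(f"[{message.level}] {message.data}")


async def human_approval(
    messages: list[SamplingMessage],
    params: SamplingParams,
    context: RequestContext,
) -> str:
    # Use the text of the most recent message as the approval prompt.
    last_message = messages[-1] if messages else None
    if last_message and hasattr(last_message.content, "text"):
        approval_request = last_message.content.text
    else:
        approval_request = "Approval requested"
    print(approval_request)

    # Keep asking until the operator types something.
    while True:
        response = input("Your response: ").strip()
        if response:
            break
        print("Please provide a response.")
    return response


async def main():
    client = Client(
        "server_sampling.py",
        sampling_handler=human_approval,
        log_handler=log_handler,
    )
    async with client:
        result = await client.call_tool("make_payment", {"amount": 1000})
        # Depending on the FastMCP version, the text may instead live at
        # result.content[0].text.
        print("result:", result[0].text)


if __name__ == "__main__":
    asyncio.run(main())
```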

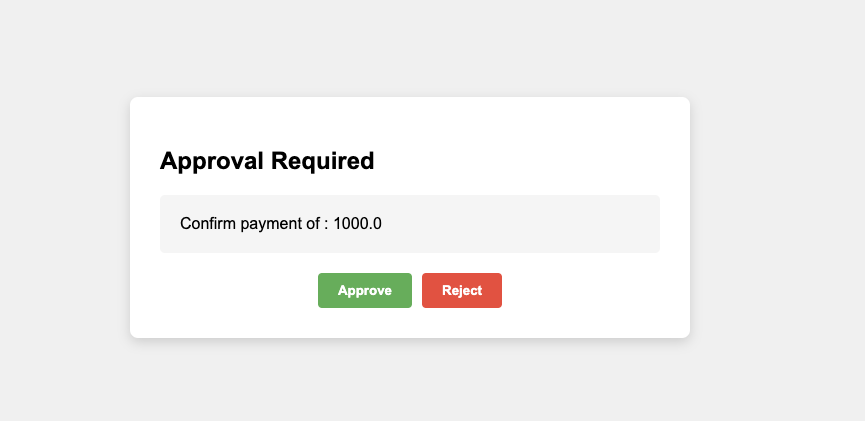

You can build simple UI-based approval mechanisms by implementing a custom handler.
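One way to keep the handler independent of any particular UI is to pass in the function that actually asks the human. The sketch below assumes a hypothetical `make_approval_handler` factory and an `ask` coroutine that you supply (a CLI prompt, a GUI dialog, a web form); it also rejects the request when nobody answers within a timeout. It only relies on messages exposing `.content.text`, as in the handler above.

```python
import asyncio
from typing import Awaitable, Callable

# `ask` is any coroutine function that shows a prompt and returns the reply.
AskFn = Callable[[str], Awaitable[str]]


def make_approval_handler(ask: AskFn, timeout_s: float = 60.0):
    """Build a sampling-style handler that delegates to `ask`."""

    async def handler(messages, params=None, context=None) -> str:
        # Use the text of the most recent message as the prompt, if any.
        last = messages[-1] if messages else None
        content = getattr(last, "content", None)
        prompt = getattr(content, "text", None) or "Approval requested"
        try:
            reply = await asyncio.wait_for(ask(prompt), timeout=timeout_s)
        except asyncio.TimeoutError:
            return "no"  # fail closed: no answer means no approval
        # An empty reply is also treated as a rejection.
        return reply.strip() or "no"

    return handler
```

The returned handler has the same three-argument shape as `human_approval`, so under that assumption it could be passed as `sampling_handler`. Note that a blocking `input()` call cannot be interrupted by the timeout, so a real UI would want an async-native prompt.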

Why It Works

FastMCP's sampling architecture naturally supports human approval patterns through:

- Protocol Compatibility: The server uses standard ctx.sample() calls, maintaining MCP protocol compliance

- Flexible Routing: Client-side handlers can route to humans, LLMs, or hybrid approval systems

- Context Preservation: The RequestContext maintains session state during approval workflows

- Error Handling: Built-in error handling ensures graceful degradation when approval is denied
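The "Flexible Routing" point can be sketched as a small dispatcher that sends some sampling requests to a human and the rest to an automated handler. Everything here is illustrative: the `[APPROVAL]` prefix is a convention I made up for this example, the two stub handlers stand in for a real operator prompt and a real LLM call, and `Text` and `Message` are stand-ins for the FastMCP message types.

```python
import asyncio
from dataclasses import dataclass

APPROVAL_PREFIX = "[APPROVAL]"  # hypothetical marker a server tool could prepend


@dataclass
class Text:
    text: str


@dataclass
class Message:
    content: Text


def make_router(human_handler, auto_handler, needs_human):
    """Return a handler that picks a target based on the prompt text."""

    async def route(messages, params=None, context=None):
        last = messages[-1] if messages else None
        text = getattr(getattr(last, "content", None), "text", "") or ""
        handler = human_handler if needs_human(text) else auto_handler
        return await handler(messages, params, context)

    return route


async def human_stub(messages, params=None, context=None) -> str:
    return "human decision"  # stand-in for prompting an operator


async def llm_stub(messages, params=None, context=None) -> str:
    return "model completion"  # stand-in for calling an LLM


async def demo() -> list:
    route = make_router(
        human_stub, llm_stub, lambda text: text.startswith(APPROVAL_PREFIX)
    )
    return [
        await route([Message(Text("[APPROVAL] Confirm payment of: 1000"))]),
        await route([Message(Text("Summarize this ticket"))]),
    ]


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

In this sketch the server stays unchanged apart from the prompt prefix; the routing decision lives entirely on the client, which matches the idea that the client keeps control of the sampling process.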

The beauty of this approach is that it transforms FastMCP's sampling mechanism from a simple LLM interface into a flexible human approval system, demonstrating the framework's adaptability beyond its original design intent.

Practical Applications

This sampling-with-approval pattern enables sophisticated use cases including:

- Content moderation before LLM generation

- Sensitive data handling with human oversight

- Multi-step workflows requiring approval gates

- Audit trails for AI-assisted operations

Key Benefits

Enhanced Security: Critical operations require explicit human approval, which helps block unauthorized actions, including those triggered by tool poisoning attacks.

Complete Audit Trail: Approval requests and decisions can be logged with timestamps and context (for example, through ctx.info() calls on the server), providing accountability.

Flexible Implementation: The system supports different approval workflows - CLI interfaces, GUI applications, or web-based systems.

Seamless Integration: Works with existing FastMCP tools and resources without requiring architectural changes.
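For the audit trail in particular, a minimal sketch of what a logged approval decision could look like is shown below. The `audit_record` and `append_audit` helpers, the field names, and the JSON Lines file format are all assumptions for illustration; FastMCP does not prescribe any of them.

```python
import json
from datetime import datetime, timezone


def audit_record(tool: str, prompt: str, reply: str, approved: bool) -> str:
    """Serialize one approval decision as a JSON line with a UTC timestamp."""
    return json.dumps(
        {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "tool": tool,
            "prompt": prompt,
            "reply": reply,
            "approved": approved,
        }
    )


def append_audit(path: str, record: str) -> None:
    """Append a record to a JSON Lines file, one decision per line."""
    with open(path, "a", encoding="utf-8") as fh:
        fh.write(record + "\n")
```

A client-side handler could call `append_audit` right after the human responds, so that the decision is recorded even if the tool call later fails.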

The Shift in Human-AI Collaboration

As AI capabilities expand, the bottleneck shifts from AI generation to human judgment. AI can produce thousands of marketing pieces per hour, but humans determine which content meets quality standards. AI can draft legal documents, but lawyers provide the critical judgment to identify overgeneralizations and potential errors.

This shift represents a fundamental change in how we think about AI integration. The value doesn't come from replacing human decision-making but from elevating humans to focus on high-level judgment while AI handles execution.

Future Developments

The MCP specification is evolving to include formal "Elicitation" protocols for human-in-the-loop workflows. While these developments are promising, LLM sampling provides an immediate, practical solution for implementing human oversight in AI systems.

Looking ahead, several enhancements could further strengthen human-in-the-loop capabilities:

- Fine-Grained Policy Layers: Dynamic rules to determine when and how sampling should trigger based on user roles or data classification.

- Real-Time Collaboration: Co-piloting approval workflows where multiple stakeholders can annotate or veto AI actions in real time.

- LLM Self-Auditing: Models explain their own reasoning before requesting approval, helping humans make informed decisions faster.

Why This Matters for Your Business

Organizations considering AI implementation while maintaining operational control will find human-in-the-loop systems provide an optimal balance. These systems deliver AI automation efficiency while preserving complete oversight of critical decisions.

This approach proves particularly valuable for businesses requiring AI to handle high-stakes operations like customer communications, financial transactions, or data management. Rather than choosing between full automation and manual processes, organizations can implement controlled automation.

For businesses ready to explore safe, controlled AI automation, human-in-the-loop systems using MCP sampling offer a practical path forward. These systems transform workflows while maintaining the security and oversight requirements that enterprise environments demand.

Ready to implement human-in-the-loop AI systems in your organization? Contact me to discuss how we can build secure, controllable AI solutions tailored to your business needs.